Storage Credential/External Location in Unity Catalog Enabled Azure Databricks Workspace

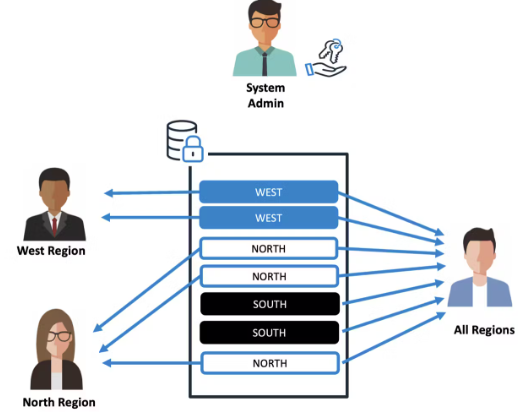

Storage credentials and external locations are subject to Unity Catalog access control policies, which determine which users can access each storage credential and external location. This gives Unity Catalog finer-grained control over who can reach the underlying storage.
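As a rough illustration of what such a policy can look like, the sketch below builds a Unity Catalog `GRANT` statement for an external location. The helper `build_grant`, the location name `finance_landing`, and the group `data_engineers` are hypothetical names chosen for this example; they do not come from any real workspace.

```python
def build_grant(privilege: str, securable_type: str,
                securable_name: str, principal: str) -> str:
    """Return a GRANT statement as a SQL string (illustrative helper)."""
    return (
        f"GRANT {privilege} ON {securable_type} `{securable_name}` "
        f"TO `{principal}`"
    )


# Hypothetical names, for illustration only.
read_grant = build_grant(
    "READ FILES", "EXTERNAL LOCATION", "finance_landing", "data_engineers"
)
print(read_grant)

# In a notebook attached to a Unity Catalog enabled cluster, the statement
# could then be executed with: spark.sql(read_grant)
```

Only principals that hold a privilege such as `READ FILES` on the external location can read paths under it, which is where the finer-grained control comes from.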

With the legacy Hive metastore, access to data lake storage is configured in one of the following ways:

Access Keys:

spark.conf.set("fs.azure.account.key.<storage-account-name>.dfs.core.windows.net","<access-keys>")

SAS Tokens:

spark.conf.set("fs.azure.account.auth.type.<storage-account-name>.dfs.core.windows.net","SAS")

spark.conf.set("fs.azure.sas.token.provider.type.<storage-account-name>.dfs.core.windows.net","org.apache.hadoop.fs.azurebfs.sas.FixedSASTokenProvider")

spark.conf.set("fs.azure.sas.fixed.token.<storage-account-name>.dfs.core.windows.net","<SAS-token>")

Service principals:

client_id = "*****"

tenant_id = "*****"

client_secret = "*****"

spark.conf.set("fs.azure.account.auth.type.<storage-account-name>.dfs.core.windows.net","OAuth")

spark.conf.set("fs.azure.account.oauth.provider.type.<storage-account-name>.dfs.core.windows.net","org.apache.hadoop.fs.azurebfs.oauth2.ClientCredsTokenProvider")…